Games with human opponents often present opportunities for ruse. In sports such as soccer, football, or badminton, there are misdirecting ruses: one pretends one’s immediate goals are in a different place in order to relocate the opponents’ focus.

In card games, such as poker or gin, there are ruses of weakness and strength, where one deceives opponents through tells and bluffs. In all of these games, ruse is considered part of play.

Often where there would be stalemates, ruses generate victory and defeat. In contrast, there are also games of trust, such as the folk game, Leading the Blind, where one must move blindfolded guided by a partner whom we believe, or Yoko Ono’s Play it by Trust (1966), where all Chess pieces are painted white.

Trust also plays an important part in Bridge because players are grouped in pairs and asked to communicate with one another solely through an abstract bidding system. One must be confident that one’s partner is not (or in some cases is) making mistakes in order to respond to their bids. A low-level example of this is the bidding convention known as “Stayman,” where one says “two clubs” in response to a partner’s bid of “one no-trump” to indicate strength in hearts and spades. If a player correctly bids Stayman and their partner does not trust them to have remembered what the “two clubs” response means, but rather assumes they meant to indicate strength in clubs, miscommunication will occur and cause the downfall of the team. In Tournament Bridge, ruses are juxtaposed against this trust and are an important part of play, to the point where certain feints are forbidden. For instance, whereas it is permissible to play a normally inappropriate card because it will fool one’s opponents, one cannot do things like pause to think when no obvious strategy presents itself. Doing the latter is dubbed “coffeehousing,” relating to old-world coffee houses where this behavior was permitted. Should someone think for too long about making a certain play and then be discovered to have had only one option, that person may be called out and have points deducted from his or her score.1

Ruse and trust are thus socially determined and regulated tacitly by the players, if not explicitly by the rules. Game theorists have studied ruse extensively, particularly with regard to the Prisoner’s Dilemma, in order to consider optimization and social structure.2 However, in this essay, I take a very different tack and explore designed opportunities for ruse as crafted narrative tools.

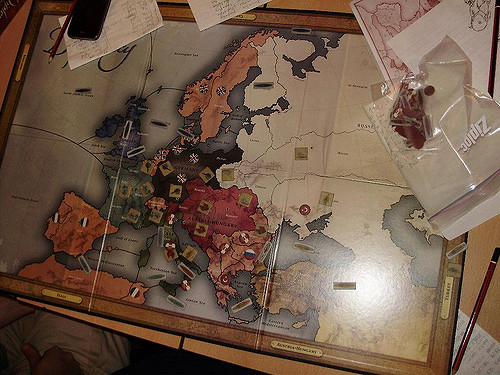

Bridge combines trust and ruse, which exist between ally and opponent respectively, but what interests me are games in which trust and ruse intermingle between the same players. Such games of player unpredictability have their participants cooperate to reach conflicting goals. Examples of these include: Diplomacy (Calhamer 2008); A Game of Thrones (Petersen and Wilson 2003); Werewolf (Davidoff 1986, Plotkin 1997); Battlestar Galactica (Konieczka 2008); and Shadows over Camelot (Cathala and Laget 2005). These are all non-digital games that, as a result of deploying rules surrounding trust and ruse, create emergent fictions of betrayal. Such fictions of betrayal are noteworthy because they are generated bottom-up by players following a rule set.

Unlike novels, theatre or film, these games offer up unscripted moments of play in fictional worlds that communicate ideas (in these cases, ideas about betrayal). Their depiction of betrayal is magnified by the fact that they are adapted from historical events, stories and folk tales, which themselves are understood as depicting betrayal. For instance, in the TV series Battlestar Galactica (2003–2004), the human race is betrayed by a machine that is virtually indistinguishable from a person. The show’s premise is that Cylons secretly continue to live amongst the few humans remaining, garnering trust, while waiting to make their final move. Instead of recounting these kinds of betrayals through pre-designed narrated stories, games of ruse, trust, and betrayal incentivize their players – and consequently their avatars – to betray each other within the confines of a game space. Celia Pearce, who has written on the topic of performing and productive players, explains: “It is clear that some fictional genres lend themselves to interactivity better than others. The key to success seems to be the appropriate pairing of genre with play mechanic.”3 Pearce suggests that not all stories are told well through games, and those that are must have a proper set of rules to match their fiction. Pearce argues for a productive player who can be considered a co-author in the creation of a narrative. Instead, I argue nearly the opposite: I suggest that there is an emergent fiction crafted by the game designer. The players in these specific games of ruse, trust and betrayal (henceforth RTBs) create a fiction in an altogether different way than authors do. They pursue goals at the behest of a designer, following that designer’s rules, and procedurally generate a fiction in parallel. To support this argument, I will go over three rules that game designers have historically put in place to procedurally generate betrayal, once I have defined several terms of note.

Game Ruse

In games, the line between a ruse and a cheat is not always clear, perhaps because ruses can bend rules to their breaking point in order to continue being effective. What is more, ruses in games are not the same as ruses simpliciter. For instance, ruses normally have no restrictions as to their purpose. One could create an altruistic ruse that would have a baby try a new vegetable, a witness testify in front of a judge, or a business person agree to give half of their fortune away. However, a game ruse can only serve the interests of the player. Borrowing from Bernard Suits, “to play a game is to achieve a specific state of affairs [prelusory goal], using only means permitted by rules [lusory means], where the rules prohibit use of more efficient in favor of less efficient means [constitutive rules], and where the rules are accepted just because they make possible such activity [lusory attitude].”4 One cannot be said to be playing in the spirit of the game (with lusory attitude) if one is deceiving opponents into improving their positions, because one must necessarily attempt to reach a specific state of affairs (the prelusory goal). To do anything that is contrary to achieving the desired state of affairs is akin to ceasing the play of the game.

To make this more explicit, consider poker, in which one must accumulate the most money possible. If Alex were to attempt to deceive Beth into gaining Alex’s own money without any ulterior motive (such as trying to keep Beth in the game because Alex is certain that he can beat Beth at a later date and hopefully between now and then Beth will grow her money with that of a third opponent, Chrissy, whom Alex cannot read so well) then clearly Alex is not playing poker (although he may be playing something that looks like it). There are, however, cases where one might deceive an ally into improving their position. But this only makes sense if helping one’s ally furthers one’s plans to reach a desired state of affairs prescribed by the game. Ruses which deceive one’s allies are somewhat anomalous, given that it is normally easier to convince an ally to make a proper move through truth and reason. Cases where these might occur would likely involve either the deceiver or the deceived being considered wrong and stubborn by the ally in question. For it is usually after failing to find a consensus about the proper course of action that one will begin to manipulate one’s allies.

Setting aside these strange cases, for now and for my purposes I will limit the term “ruse” to mean an intentional act which communicates something about the game-state in order to induce an opponent to worsen her position. This definition does not mention whether the ruse is sanctioned by the rules, which intuitively makes sense, given that certain ruses can only be the results of cheating. Nevertheless, I will continue to discuss only fair ruses, ones which do not break game rules, given that this is a study of how rules build fictions. My definition above specifies that a ruse relate to the game-state in order to avoid discussing ruses that operate on a meta-gamic level, such as lying about the rules or about the alcohol content of an opponent’s beverage. The definition cannot specify anything about the truth value of the communication because truths are often told as part of ruses, either to misdirect the attentions of one’s opponents or because one assumes the opponent will take the truth for a lie. This communication can happen through bodily gestures, changing the game-state, or verbal statements. Finally, some ruses are meant to improve an opponent’s position with the ultimate goal of worsening another opponent’s position. Alex, in second place, might improve his position by deceiving Beth, who is in third place, in order for her to harm Chrissy, in first place. In this case, the result could potentially be Alex in first, Beth in second, Chrissy in third. So while the ruse would have helped an opponent (in this case Beth), it still induced an opponent to worsen her position (in this case Chrissy).

Game Trust

Game trust, much like game ruse, is a fickle concept which would do well with a tailored definition, rather than rely on definitions of trust simpliciter (if such a concept could even be defined). For my purposes, if Alex trusts Beth, Alex is said to believe that Beth is not attempting to deceive Alex and that Beth is going to intentionally improve Alex’s position. In other words, to trust someone is to believe that they are not attempting a ruse at your expense when it appears that they are aiding you. Therefore, a precondition for a game with trust is that there are situations which make it reasonable to aid another player, but not necessary.

Diplomacy is a game in which players secretly negotiate with other players, attempting to gain control of Europe. In Diplomacy, trust enables players to act on verbal, non-binding agreements, such as when Alex tries to take Belgium away from Chrissy, with the support of Beth in Germany. We can say that Diplomacy grants players the opportunity to trust one another because players can write a support command for an opponent who will benefit from it. Players in Diplomacy can trust each other, at times, knowing there are mutual benefits to lasting alliances. One might argue that although alliances might be made, there is in fact no trust, because the game does not forbid players from lying and does not bind anyone to their agreements. However, if players did in fact play like that, it would be equivalent to playing without alliances and we would be forced to return to a set of stalemates. Soon, a paradox emerges: how can trust persist even after multiple betrayals across different games and across games into real life? A first answer could be that players never really trust each other in games and that any act that aids an opponent is understood as having an additional motive of eventually helping oneself. However, this answer is somewhat unsatisfactory, given that these games are intended to be played multiple times, especially when one insists that favors be regularly offered for some kind of returned favor, even to people who have historically failed to return their favors. A second answer might relate to the kind of social space which games take up.

Semi-Sealed Game Space

The “magic circle”, often credited to Johan Huizinga,5 but more recently championed by Eric Zimmerman,6 describes the social space of play which is dissociated from the everyday world. This concept was part of a set of conditions Huizinga used to define games. The definition, matching closely with Suits’, goes, “a voluntary activity or occupation within certain fixed limits of time and place, according to rules freely accepted, but absolutely binding, having its aim in itself and accompanied by a feeling of tension, joy, and the consciousness that it is ‘different’ from ‘ordinary life.’”7 With RTBs, the magic circle is helpful for understanding how people can come together, betray one another, and still leave as friends. Or even: how players can continue to trust one another after repeated betrayals among each other over several games. Entering the game space, one might be signing a tacit contract with one’s fellow players as to what can be done, what can be taken away from the experience, and what can be brought into it. However, these ideal scenarios are not always the case. While initially quite popular, the concept of the magic circle has been refined/attacked several times, perhaps most notably by Dominic Arsenault and Bernard Perron in “Magic Cycle: The Circle(s) of Gameplay,”8 Mia Consalvo in “There Is No Magic Circle,”9 or by Ian Bogost in Unit Operations. Bogost, in an attempt to save games from being considered rhetorically impotent, writes:

Instead of standing outside the world in utter isolation, games provide a two-way street through which players and their ideas can enter and exit the game, taking and leaving their residue in both directions. There is a gap in the magic circle through which players carry subjectivity in and out of the gamespace.10

Arguing that games can change one’s mental state, Bogost challenges one of early game design theorist Chris Crawford’s central tenets of games, safety.11 For Crawford, games are a place where consequences to actions are only simulated, and this is one of their important points of attraction.12 The problem with this argument is that Bogost chooses to undermine Crawford by citing rage as an unsafe result of many games, but with RTBs equally terrible things can spill out into real life. While an RTB might begin with friends or strangers, if this is their first time playing, many will not have formulated firm ideas as to the trustworthiness of the other players. However, after each game, players will learn more about one another from knowledge they gather in-game and take out. Eventually, the more untrustworthy players begin to lose games as none will ally with them, thus breaking the fairness of the game. What is worse, players may infer that the other players are untrustworthy in real life. But while it is necessary for the continuity of play and for the social well-being of players that these games remain hermetically sealed off from the everyday (and often from each other), it is equally important that these games remain able to communicate with their audience.

The bias here is that games are a form of communication and that some games communicate in artful ways. This means that players cannot be allowed to remain safe while inside the magic circle, otherwise they limit the relevance of their experience and the value of a given game-art-work. RTBs need a porous magic circle, one in which players have a communal understanding of what should and should not be taken away. That said, it is now time to look at how designers go about getting players to feel betrayed or to feel like betrayers.

Unknown Agendas

While Suits refers to goals, and Huizinga refers to aims, both appear to speak of a desired result from play that is constrained by a set of rules. For instance, if you want to play golf with someone else, then you must both hold that the desired result is each of your balls in the hole, using fewer strokes than your opponent. With RTBs these desired results are not always symmetrical, as they are in golf and in games like football, racing or chess. In these games, one can write a single goal which applies to each team/player: score the most touchdowns, capture the enemy king, or reach the end of the track before anyone else.

In Bruno Cathala and Serge Laget’s Shadows over Camelot, each player takes the role of a knight from King Arthur’s round table. These players are encouraged by the rulebook to speak in archaic sentence structures and act as their chosen knight (be it Gawain, King Arthur, Sir Kay, etc.). Each player receives a loyalty card from a deck with a single traitor card inside. The deck is always larger than the number of players so that it is never certain whether there will be a traitor in a given game. While the knights must attempt to place seven white swords on the round table, the traitor must do his best to undermine the round table’s attempts at acquiring white swords, which is to say do his best to surround the castle with twelve catapults, kill all the knights, or place six dark swords on the round table.13 Before being uncovered, the traitor will secretly harm the round table, usually by pretending that his hidden discards are appropriately low when they aren’t, or that his abilities of foresight are preventing, rather than encouraging, what the knights consider “evil.” However, the game is designed to defeat a traitor who remains passive. The traitor must additionally attempt to have the knights accuse one another of being the traitor, for every false accusation leads to a dark sword being laid on the table. Once successfully accused, the traitor takes on a new role, which makes him dangerous in new, but non-secretive ways. This describes only a small portion of the game, which would take several pages to explain, but these mechanics essentially enable the game to retell a tale similar to those of the Vulgate Cycle. This text recounts the evil deeds of Morgan Le Fey, her denouncing of Lancelot’s betrayal and his quest for the Holy Grail.

For the players to beat the game, they usually need to perform a combination of feats which involve defeating Lancelot, finding the Holy Grail, repelling the Saxons/Picts, defeating the dragon, dueling the Black Knight and consistently preventing the evils of Morgan Le Fey. One accomplishes this by collecting and using cards with certain abilities. The players must trust one another to make the right decisions, which often have repercussions and opportunity costs known only to the player making them. Midway through the game, players are allowed to begin accusing one another in an attempt at revealing the traitor. Failure to do so indicates either that there isn’t a traitor in the game, or misplaced trust on behalf of the non-traitors and successful ruse on behalf of the traitor. Trust is only possible because there is a possibility, and not a certainty, that multiple players are working towards the same goal. As a result, betrayal is probable in Shadows over Camelot. Combined with movements and plays which mimetically or thematically relate to the world of Camelot, the game builds an emergent fiction from the ground up with asymmetrical goals.

Unattainable Goals

Another method of structuring RTBs is to make it so individuals cannot attain their goals without help. If we look at Diplomacy, we can say that good players are forced to trust one another and form an alliance. Without this kind of communication between players, Diplomacy will likely generate multiple stalemates, as one cannot defend the number of fronts one has, let alone push them forward. To try and do so would turn the game into guesswork as players must attempt to foretell which units will move in which direction. Therefore, alliances necessarily form around players who rationally want to win and have the game reach its conclusion. Regardless, should an alliance become more of a nuisance than a benefit, such as when the only opponents left are impractical to attack, or when the game has only a single opponent left, then a break in trust proves necessary. No longer can either player trust the other. It becomes practical to betray before one is betrayed. The same can be said of Kevin Wilson and Christian Petersen’s A Game of Thrones, in which play is nearly identical. Instead of writing down orders with specific attacks and supports, however, players give orders to their units to behave in a certain way (defend, march, raid, support, consolidate power), which offers flexibility in the face of a betraying ally. And while it is rare for players to support one another in the same way, people will often need to make ceasefire agreements so that they can leave claimed territory undefended to press an attack elsewhere. In Battlestar Galactica, the need for teamwork behaves differently. A prominent mechanic is the “executive order,” which allows a player to forgo their once-per-turn action to grant another player the opportunity to take two actions (with the only restriction being that they cannot “executive order” anyone else). However, while an executive order doubles the effectiveness of a turn, players cannot always be certain that they are ordering someone with the same agenda. If it were unnecessary to play executive orders, people wouldn’t, but because the game has goals which are too difficult to attain individually, players are yet again forced to trust one another.

Soft Mechanics

Before continuing to the final element of RTBs, the term “game mechanics,” as previously mentioned, will need some quick refinement. Here I build off of Miguel Sicart’s definition of these as “methods invoked by agents, designed for interaction with the game state.”14 The important thing to note here is that he is working mainly with video games such as Gears of War, and although his text indicates that this definition should work for board games, certain clarifications are required. The first is that, although players will convince, manipulate, ally with, or betray each other, these are not game mechanics in any of the games mentioned so far. No token or indicator on the board or cards of these games will ever indicate the state of these kinds of player relations in the game. In addition, the game state is not argued to extend to the minds of the players, and while that is certainly one route that could be further explored for some games, it is impossible for RTBs. This impossibility stems from the way ruses work. Although players will have representations of the game state in their minds, players will attempt to manipulate these, discredit them or take advantage of mistakes already made inside them. The only time a mind will hold a game state in an RTB is in a situation like Werewolf, in which a neutral person will facilitate the gameplay without playing the game themselves. The relevant mechanics of these games are instead the means with which players can act on their understandings of their relations with other people. Sicart writes, “Game mechanics are concerned with the actual interaction with the game state, while rules provide the possibility space where that interaction is possible, regulating as well the transition between states.”15 This statement leaves a clear gap where ruses occur, somewhere inside the space provided by the rules, but outside the actions provided by the game mechanics. This is not to say ruses cannot be caused by changing the game state and having opponents infer information from those changes, only that it is conceptually impossible for trust or ruse to be methods which can be called on. Neither is this to say that ruses and alliances are at the meta-game level; without player unpredictability elements, there would be neither a Diplomacy nor a Shadows over Camelot. For our purposes, we might refer to these as soft mechanics, which do not change the game state, but a person’s perception of it. These are not methods which are designed by the game maker, but methods which pre-exist socially and are assumed to continue existence even when inside the magic circle.

Falsifiable Information

Soft mechanics seem to thrive in RTBs, given the sheer amount of falsifiable information. Because these games do not enforce verbal agreements and encourage secrecy, illocutionary acts can make use of soft mechanics, given that they are not allowed to make changes in the game-state. Player A’s comments cannot be guaranteed by the game, so when Player A asks Player B for a favor, explaining that she will return it moments later, Player A is free to disregard her promise without penalty. To make agreements binding would turn soft mechanics into plain mechanics because the game state will have changed. What is more, this would reduce the importance of trust, or even negate it, given that players could not be lying without cheating. Corey Konieczka, with Battlestar Galactica, does something similar to Cathala and Laget, using the same loyalty card system. In order for either of their mechanics to work, their rulebooks each have a section on secrecy, with nearly the exact same rules, where Knights could be replaced by humans and traitor by Cylon:

Collaboration with other like-minded Knights in your group will often turn out to be crucial to winning.

A few rules must thus be observed at all times, when conferring with your fellow Knights.

Declarations of intent can be made freely; resources and capabilities can all be discussed openly, as long as your comments are general and nonspecific.

However, you must never reveal or discuss the explicit values of cards in your hand, or volunteer any other specific game information not readily available to your fellow players…. It is permissible to lie about your intent or your resources at hand (a particularly useful ploy for a Traitor), though you must never cheat.16

However, the Battlestar Galactica rulebook adds a unique section to explain that the secrecy retains a purpose in maintaining the proper ambiance:

A key element of Battlestar Galactica: The Board Game is the paranoia and tension surrounding the hidden Cylon players. Because of this, secrecy is very important, and the following rules must be observed at all times…17

It should be noted that these rules are explained as means of inducing aesthetically relevant emotional content. Players take on the roles of those responsible for Galactica’s everyday function. However, just as in the television series, not everyone is who they appear to be. Rules about revealing information and lying meet their theme successfully. Pearce writes, “In the ideal case, the play mechanic is synonymous with the narrative structure; the two cannot be separated because each is really a product of the other.”18 In this case, the soft mechanics of communication and secrecy, combined with a necessity for cooperation with people who might be against you, mesh with the desired fiction. One understands how to be an effective game-traitor by understanding how fictional and real traitors operate. However, to act as a game-traitor will often provide insights into lying and deceit, effectively communicating how these operate in given situations.

It is important that the fictional elements of the board game’s architecture not be understated. In “Game Design as Narrative Architecture,” Henry Jenkins argues that virtual game spaces elucidate facts about a fiction by the way they are constructed. He theorizes four distinct ways in which architecture tells stories. While it is likely that each of these can be found operating in board games, there are three in particular which interest me here. Firstly, as an “Evocative Space,” a video game, and I would argue a board game as well, through its depictions and cultural associations with pre-existing narratives, evokes shared ideas about how the world represented in the game functions and who inhabits it.19 With Shadows over Camelot, we are brought to think about the Arthurian legends; with Battlestar Galactica, the fiction of the television series, and so forth. Games come packaged with a story that players already know, but upon which the fiction can be developed. Should players be ignorant of these narratives, then it stands to reason that they will lose out on much of the content and context. Secondly, Jenkins discusses “Emergent Narratives” where he explains that characters in The Sims “are given desires, urges, and needs, which can come into conflict with each other, and thus produce dramatically compelling encounters.”20 The same, of course, is happening in RTBs where multiple agendas and forced interaction generate their own encounters. Players first take in the fiction which is evoked and then play out an unscripted, but dramatically charged initial position. There is a blurring here as emergent narratives tend to be the result of a third narrative form, “Enacted Stories.”21 Players, through their actions and participation, generate the actions of their avatars or of a given system. So when a player makes a move, which subsequently supports an opponent’s position, we can say that in the fiction of this playing of the game, the narrative unfolded in such a manner.

Conclusion

Needless to say, this work is somewhat ambitious in attempting to touch on so many topics and their relations to one another. While it delineates certain formal definitions of what it would mean to trust and trick in games, it also makes attempts at demonstrating both how fictions emerge from play and how these emerging fictions add aesthetically interesting dimensions to fictional content, in particular, by engaging players to experience a version of the fiction first hand. With RTBs, it was shown that the rules which regulate initial situations must conflict with the desired results of each player. Games that have players take on avatars from a fictional world with multiple hidden agendas, pose tasks dependent on co-operation and require secret/falsifiable information will procedurally generate a fiction of betrayal. Bogost explains that there is unavoidable, ancillary, unintended, or accidental politics inscribed in games: “The objective simulation is a myth because games cannot help but carry baggage of ideology.”22 However, it is also possible that these politics could be intended. Just as one can make a city-building simulator which can favor libertarian policies by collapsing cities that tax above low thresholds, one can simulate a fictional world which favors trust and betrayal.

–

Featured Image “Venus blindfolded” by Mirko Tobias Schäfer

–

William Robinson is a PhD candidate in the Humanities program at Concordia University. He is on the board of the Digital Games Research Association and an active member of the Center for Technoculture, Art and Games, where he completed his Master’s thesis on the aesthetics of game play in 2012. His theoretical work focuses on serious game design, the political-economy of game creation, media archaeology, digital humanities, e-sports, toxic gamer culture and procedural rhetoric. His art practice deploys strategy games as political arguments. His doctoral work on Montreal’s early 20th century labour movements explores the possibilities for game design, as historiographical research-creation.