As is, platform studies has nothing to say about analog games.

When Richard Garfield invented tapping, a game act in Magic: The Gathering (1993) wherein a player rotates a card sideways to indicate use, he neither discovered nor invented a new physical feature or process within a playing card, nor did he invent some new way to physically modify a card.

He recognized that it was convenient and useful to appropriate a card’s orientation as having a certain significance; that orientation could be used to indicate a logical state for use in a logical process. Tapping is a brilliant design decision because the act is easy for people to perform, the result is clearly observable, and the binary state that the rotation signifies is sufficient for creating conditional logic such as ‘creature cards can block, if not tapped’. The act also makes the card text more difficult to read—a nice parallel to removing an item from the player’s domain of influence.

There are several lessons here: (1) The card doesn’t compute, the person computes. (2) The person performs the computation by appropriating an otherwise continuous feature of the world. (3) The very act of performing the computation has experiential side effects. And so it is that people constitute a unique computing system, i.e., a unique platform.
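The tapping rule can be written out as a minimal sketch. In keeping with lesson (1), the program stands in for the reasoning a player carries out, not for anything the card does. The names `Card`, `tap`, `untap`, and `can_block`, and the card called "Hypothetical Bear", are illustrative assumptions and are not drawn from Magic: The Gathering's rules text.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    # Orientation read as one bit: upright = False, sideways = True.
    tapped: bool = False


def tap(card: Card) -> None:
    """Rotate the card sideways to mark it as used."""
    card.tapped = True


def untap(card: Card) -> None:
    """Rotate the card back upright."""
    card.tapped = False


def can_block(card: Card) -> bool:
    """The conditional rule from the text: a creature can block if not tapped."""
    return not card.tapped


bear = Card("Hypothetical Bear")  # hypothetical card name
print(can_block(bear))  # True: the card starts upright
tap(bear)
print(can_block(bear))  # False: the card is now sideways
```

A single boolean is enough here because the rule only asks whether the card is sideways or not. Every other angle the card could physically take is ignored.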

Platform Studies

With regard to electronic computing, Ian Bogost and Nick Montfort make several observations: computer systems exist in standardized forms, or platforms; each platform affords designers particular computational opportunities; these opportunities influence the character of the artifacts made for the platform; and lastly, these relationships were hitherto overlooked.1 For example, the stringent memory constraints of the Atari VCS made pseudo-random number generation prohibitive (even the small space an algorithm would take up was valuable), but the system’s instruction set allowed access to the bytes used in running the game code. So for Yars’ Revenge (1981), Howard Scott Warshaw used data from unrelated instructions that the machine was running to create the kind of pseudo-random data needed for drawing the game’s “neutral zone”. As Montfort and Bogost state, “When the player looks at the neutral zone on the screen, he is also literally looking at the code.”2 This kind of technical perspective can reveal hidden authorial fingerprints and allow us to better understand such games in relation to similar artifacts.

Bogost and Montfort’s observations form the basis of the ongoing and successful MIT Press book series Platform Studies. Each book concerns how developers cope with a set of computational capabilities and the effects of this coping on the procedural artifacts that depend on that platform. The underlying premise is that we can better understand digital artifacts qua authored entities if we better understand the unique technical conditions under which they were created.

But whether because game systems are the more recognizable platforms,3 because of some affinity between games and computers,4 or for some other reason entirely, computer games are a prominent subtopic in platform studies literature. This has led Nathan Altice (2014)5 and Jan Švelch (2016)6 to consider what Bogost and Montfort’s perspective might contribute to the study of analog games.

However, their responses take liberties with how platform is construed. Their paths diverge over the topic of computation; Švelch jettisons this aspect of platform studies altogether. Their approaches pose equally divergent questions for future analog platform studies. Although each of them makes insightful observations, each admits different complications. I propose that these can be avoided and other insights gained by branching in yet another direction: one that emphasizes computation even more than Altice does, and one where computational capabilities are situated in people, not materials. In short, accepting that computation need not be done by technology, people’s ability to compute qualifies people as platforms.

This notion has at least two precedents. Designer Tim Fowers used a people-as-platform metaphor to introduce a room full of game programmers to board game design at IndieCade.7 His framing was similar to that of Samara Hayley Steele, who states that “aggregate larp rules are a type of code that runs on humans.”8 However, I am not advancing an argument about whether or not rules are a kind of code, or as Steele goes on to claim, that programming languages are like human languages (or vice versa). My point is more plain: People really are a computing platform because people really do compute. By what means people share, learn, and discuss game algorithms, instructions, or “code” is no doubt an important topic. But there are more pressing matters here: What human capabilities allow for anything like “human code” to exist in the first place? How do these capabilities affect design decisions and the experience of playing games that utilize these capabilities? In sum, how is an analog platform study possible without equivocating on platform studies’ fundamental concepts?

What gives?

As is, platform studies has nothing to say about analog games. Bogost and Montfort define platform as “a computing system of any sort upon which further computing development can be done.”9 But their notion of “any sort” shouldn’t be taken too liberally; they clearly constrain their subject matter to electronic artifacts through frequent references to hardware, software, and digital media:

“The hardware and software framework that supports other programs is referred to in computing as a platform.”10

“Computational platforms, unlike these others, are the (so far very neglected) specific basis for digital media work.” 11

“The [Platform Studies] series investigates the foundations of digital media: the computing systems, both hardware and software, that developers and users depend upon.”12

Technologies such as cards, dice and little wooden blocks are not electronic artifacts; they cannot themselves compute; they cannot execute algorithms. Strictly speaking, we could define analog games as those games that exist sans platform. So something must give in order to do an analog platform study (at least in spirit). Altice and Švelch provide two examples. Each raises useful points and suggests very different directions for future analog platform studies. Moreover, they admit different (and interesting) complications that future studies should either address or avoid.

Altice frames playing cards as a platform. He discusses the historical, social and technological contingencies of cards, their use in contemporary games and the consequences of their attributes on game design decisions. He makes an effective argument that cards’ material attributes provide the kinds of capabilities and constraints on game authorship that platform studies seeks to explicate. His approach is clearly in the spirit of platform studies.

But in order to talk about cards in this way, Altice takes a tricky position that entails some undesirable consequences. He simultaneously proposes that cards are relevant to platform studies because of their computational capacities but that it is their non-computational qualities that are relevant. Altice begins by describing Richard Garfield’s tapping mechanic as like a “processor upgrade” and in so doing seems to bridge the gap between platform studies’ computational concerns and analog media.13 But Altice soon leans away from this metaphor. After conceding that a computational framing carries certain (unnamed) risks, he argues that the more important underlying topic of platform studies is material constraints. This shift either burns the computational bridge from platform studies or denies the need to build such a bridge at all. The former would undermine his claim to being a platform study; the latter is tenable but creates a significant problem. If platform studies are foremost about material constraints, then the topic space is unbounded. Appropriate platforms could include rocks, tables or cold rolled steel. Perhaps this is one of the unspoken risks that Altice alludes to: if we can take cards as computational, why not everything else?

In contrast, Švelch disapproves of the computational aspect of platform studies altogether. In place of Bogost and Montfort’s stipulated definition of platform, Švelch substitutes a broader version from Tarleton Gillespie.14 His view is that platform should refer to a structure that allows people to “communicate, interact, and sell,” as opposed to a technology that runs programs. Švelch then uses this perspective to discuss how the collectible card game Magic: The Gathering qua Gillespie-platform facilitates trading, card modification and other community practices. His work provides a look at how an existing game can spur interrelated meta-game activities that a computational sense of platform studies might fail to explain or address.

Švelch’s specific target is a good one. He aims at platform studies’ “analytic of layers”—the taxonomy of related scholarly work that Bogost and Montfort describe in order to situate their undertaking. These divisions are worth considering further because they can be critiqued even while accepting the computational sense of platform. For example, we could note how platform affordances15 are not properly located in the computing system itself. Even in Montfort and Bogost’s inaugural platform study of the Atari VCS,16 it’s clear that the console’s capabilities expand over time due to the growth of shared knowledge about tricks and techniques. In this way, the constraints of the platform cannot be cleanly separated from the community of practitioners. Whether or not developers are “using,” “discovering,” or “inventing” a platform’s capabilities is an interesting question worth further consideration.

Despite Švelch’s interesting argument, if we pursued the direction that he advocates, we’d need a new name for the line of inquiry previously known as “platform studies.” To be clear, Švelch’s approach is, prima facie, a problematic basis for Bogost and Montfort-style analog platform studies because it rests on a definition of platform that Bogost and Montfort have explicitly considered and dismissed.17 Under the section “Misconception #4: Everything These Days Is a Platform” they write:

“If [Gillespie] reads the computational sense of “platform” as outdated, this view is not at all tenable… Current video game developers, for example, have a very clear idea of what “platform” means, and they use the term in the same way that we do… The sense of a platform as a computational platform…is certainly, overall, the most relevant one in the history of digital media.”

And while Švelch’s stated goal is to strengthen their perspective, his revisions are substitutive, not additive. His emphasis on community practices obscures the kind of questions that platform studies was invented to address in the first place, e.g., what were the computational constraints and their influences on Garfield’s original design of Magic: The Gathering? How does this game relate to other games designed within the same constraints?

Yes, these questions in their strict form have a fundamental issue in assuming that analog games are contingent on computational constraints, and I will deal with this below. However, Švelch’s critique is aimed at platform studies as a whole and thus represents a different concern—that it is not advisable to apply platform studies as is to analog games because of an inherent flaw.

The argument behind this charge is problematic. Švelch goes too far in framing the topic’s limits as a defect. He argues that platform studies has a “blind spot” in that it implicitly prioritizes technical aspects, but this prioritization is explicit. Bogost and Montfort explicitly advance a computational sense of platform. They are clearly interested in the computational capabilities that a developer contends with when crafting procedural artifacts. The fact of this emphasis and its centrality to platform studies is independently noted by Thomas Apperley and Darshana Jayemanne.18 They state that the value of platform studies is that the focus on computer systems provides a novel yet stable basis for investigation. If this grounding is to prioritize technical aspects, then so be it; it is just the nature of studying computational craft. Whether or not these investigations dovetail into community practices or any other topic is immaterial.19 Platform studies is not game studies with a different name.

People as platforms

In contrast to Altice and Švelch, I am willing to bend on platform studies’ technology clause. Bogost and Montfort have the right of it when they emphasize platforms’ computational idiosyncrasies. But while computation may be essential to platform studies, technology is not essential for computation.

People also compute. People can perform algorithms. People can read and enact algorithmic instructions. Analog game design is contingent on human algorithm enactment capabilities. Therefore, in the absence of the technology clause, people qualify as a platform (or a category of platforms). This statement has several unintended implications, which I will dismiss presently before elaborating on three important facets of the human platform: (1) experiential side effects; (2) computational appropriation; and (3) the ability to perform non-algorithmic processes.

I am not suggesting that analog platform studies should frame people as nothing other than algorithm enactors. Nor am I presuming or advocating for a computational theory of mind.20 Nor am I claiming that gameplay or games are essentially computational. I am only pointing out that humans can perform algorithms in addition to (or by means of) all of their other capabilities, and that these sets of operations alter the stakes around claims related to analog platform studies.

I also do not mean to suggest that analog platform studies should be, foremost, a digression into cognitive science. I take the ultimate goal of a platform study to be a better understanding of the ways in which specific computational characteristics indirectly influence the kinds of experiences we have with the procedural artifacts that depend on the platform in question. And while cognitive facets like short-term memory limits and the speed at which people can perform arithmetic are surely relevant, there are features of the human platform that are more consistent across people and more fundamental to the experience of analog gameplay. Let us turn to them now.

- Experiential Side Effects

Human computation is experienced from the first person; the process has a phenomenological dimension to it. For people, there is something that it is like to perform an algorithm. There are experiential side effects. For example, the children’s game Snakes and Ladders and the folk card game War are experientially distinct, despite the fact that formal descriptions of both games involve the same amount of player input, namely: none at all. Similarly, drawing a random card from a stack of six is experientially distinct from rolling a die even though each method of randomization is statistically equivalent. More interestingly, good enough results can be had by flipping three coins and reading them as a three-bit number from zero to seven. While this method can theoretically produce an endless sequence of no-results (zero or seven), the occasional no-result can intensify drama by delaying resolution.
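The three-coin method can be sketched as a small rejection procedure. This is one reading of the passage, assuming the coins are read as binary digits and that zero and seven trigger a re-flip. The function name `roll_with_three_coins` and its `flip` parameter are illustrative assumptions.

```python
import random


def roll_with_three_coins(flip=lambda: random.randint(0, 1)):
    """Return (result, attempts), where result is a value from 1 to 6.

    Three flips are read as a binary number from 0 to 7, first flip
    as the most significant bit. A 0 or a 7 is a no-result and the
    coins are flipped again.
    """
    attempts = 0
    while True:
        attempts += 1
        value = 4 * flip() + 2 * flip() + flip()
        if value not in (0, 7):
            return value, attempts


result, attempts = roll_with_three_coins()
print(f"rolled {result} after {attempts} attempt(s)")
```

With fair coins each of the six surviving values is equally likely, and a no-result occurs on two of every eight attempts. The `attempts` count is a rough proxy for the delayed resolution the paragraph describes.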

More importantly, experiential side effects can impact a game’s formal processes despite the game’s formal description not covering experiential features. This is evident in cases of bluffing, where the challenge is to avoid expressing experiential side effects. There are no instructions in Poker that describe the game significance of feelings or expressions yet these phenomena play a pivotal role in how the game state changes. Experiential side effects can lend additional complexity to analog gameplay. Designers are wont to introduce or eliminate this complexity as desired.

If platform studies is about how computational capabilities indirectly affect our experiences with procedural artifacts then this facet may be the most important. The experience of analog gameplay does not begin with some kind of encounter with an abstract formal process; it includes the experience of instantiating those very processes.

- Computational Appropriation

Components do not compute, players compute using components. When humans play analog games they appropriate objects in order to perform computations. By this, I mean that people compute by way of assigning formal significance to some features of the world. The rotation of a card means this, the shape of a hand means that, and the size of a pile of little wooden cubes means nothing at all—unless we say otherwise. In this way, the computational constraints and opportunities that analog game designers face proceed from the human ability to assign significance and not just from the physical attributes of materials.

This perspective is rooted in a broader view expressed by John Searle21 and in some capacity by Ned Block,22 John Bishop,23 John Preston24 and Schweizer and Jablonski25—that whether or not some process is a computation depends on whether or not we attribute to it the status of it being a computation.26

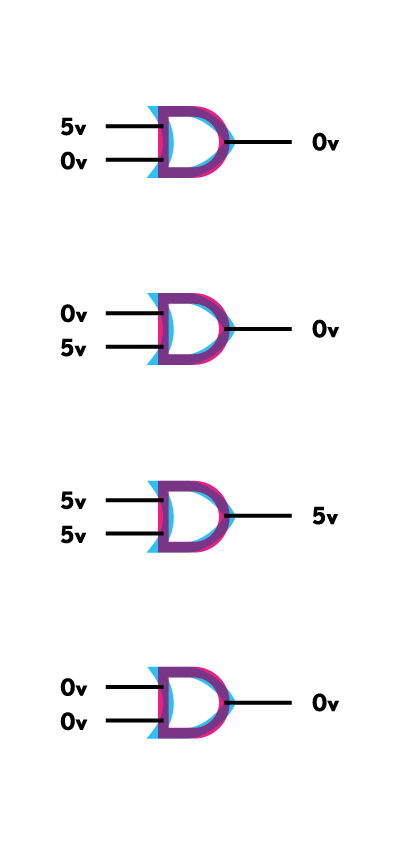

To borrow and extend an example from Searle, we could appropriate a house window for doing computation by assigning a 0 to it being open and a 1 to it being closed. But this assignment would not describe a physical fact about the window nor would it have any bearing on a breeze that comes through. Inversely, there is nothing inherent in the window’s many variable properties (openness, cleanliness, temperature, shuttered-ness, etc.) that would allow us to discover that the window is computing, let alone what, exactly, it may be computing. This assignment dependence is especially apparent in cases where the exact same physical processes can be said to be simultaneously performing two different computations. As Block and Bishop point out, there is no physical difference between a circuit that implements a logical OR operation and one that implements a logical AND operation except for which voltage is assigned which digital value.27 For some thing to be part of a computational process, some significance must be intentionally assigned to an arbitrarily limited set of its physical features or relationships.28
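The AND/OR point can be made concrete with a short sketch. It models a single hypothetical gate whose behavior is described only in terms of "high" and "low" voltage levels, then decodes that one behavior under two different assignments of digits to levels. The names `gate`, `HIGH_IS_ONE`, `HIGH_IS_ZERO`, and `truth_table` are illustrative assumptions, not taken from Block or Bishop.

```python
from itertools import product


def gate(v_a: str, v_b: str) -> str:
    """Physical behavior: the output is 'high' only when both inputs are 'high'."""
    return "high" if v_a == "high" and v_b == "high" else "low"


# Two assignments of digital values to the same voltage levels.
HIGH_IS_ONE = {"high": 1, "low": 0}
HIGH_IS_ZERO = {"high": 0, "low": 1}


def truth_table(assignment):
    """Read the gate's behavior as a logic table under one assignment."""
    decode = {bit: level for level, bit in assignment.items()}
    table = {}
    for a, b in product((0, 1), repeat=2):
        out_level = gate(decode[a], decode[b])
        table[(a, b)] = assignment[out_level]
    return table


print(truth_table(HIGH_IS_ONE))   # the AND table
print(truth_table(HIGH_IS_ZERO))  # the OR table
```

The `gate` function is never changed between the two calls. Only the assignment differs, which is the sense in which the operation being computed depends on the significance we give to the voltages.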

I must concede that the perspective here could also apply, with problematic results, to the digital computing systems that Bogost and Montfort are interested in. By such an account, even electronic systems would be computational only insofar as we judge their behaviors to be the correct implementations of some algorithms. The potential validity of such a stance is hinted at in the Yars’ Revenge example: The machine behavior that Warshaw used to generate a random visual texture was only a component of a pseudo-random generation algorithm insofar as Warshaw appropriated it as such. This leads us back to Altice’s problem: if humans are necessary for something to be a computation and if any observable feature can be appropriated for computation, then is it not the case that platforms are solely defined by their specific material qualities?

To this I can only reply that the case of a person computing by moving around a few dozen inert objects is notably distinct from that of a person who computes with a billion man-made electric transistors perpetuating their own state changes. In the latter case, the machines are so complex and so opaque to their users that the study of the machine is worth emphasizing, but in the former, the peculiarities of a person’s role in the computation are much more salient.

This brings me to another point in favor of Altice’s interest in materials: even though a specific set of features might implement different computations, it doesn’t follow that these specific features can implement any computation. As Block notes, even though the same circuit could implement different operations, there are many operations that that circuit cannot implement.29 Similarly, a designer can appropriate a pair of dice for many different algorithms, but they cannot appropriate these dice for any algorithm. Moreover, the features that designers can appropriate are limited to what players can conveniently observe. For example, the temperature gradient of a playing card is not a good candidate for appropriation because players have no way of observing this gradient under normal conditions. This means the potential computations we can perform are partly constrained by the components at hand. And a person with a 52-card deck of western playing cards and a table could be said to constitute a sub-category of the person platform.

But while a set of components may constrain potential algorithmic procedures, we must be careful not to privilege the material as the source of the computation. This is imperative for understanding the imaginative work that analog game designers do. In the case of Richard Garfield’s aforementioned tapping innovation, he neither discovered nor invented some new physical feature or process; he invented a novel appropriation. This fact of appropriation also provides a route for imbuing analog games with cultural significance: we can appropriate culturally significant entities. Poker can be played with chips, cash, or chore obligations. We can appropriate his cards, her valuable cards, or his valuables. In these cases, the components obtain a kind of polysemy or multi-stable meaning. How these intersecting significances might create more complex meanings and experiences is surely an experiential side effect for game designers to consider.

- Non-algorithmic processes

Finally, not all analog game processes are algorithms. The human platform is uniquely capable of supporting gameplay that incorporates other kinds of activity. In other words, the set of algorithms that a person can reasonably perform does not exhaust the set of processes a person can enact. By extension, analog game designers do more than define algorithms.

For the moment, let us accept Juul’s intimation that the possible rules of a game played on a computer are exactly those rules that are algorithms.30 This provides clear examples of uniquely human instruction following capabilities. To clarify what does not constitute an algorithm, Juul borrows Donald Knuth’s example of a recipe. A recipe, he notes, requires knowledge about the domain beyond the instructions. (For example, how much is a “pinch” of salt exactly?) Because an algorithm must be useable without understanding, he says, the recipe does not qualify as an algorithm. However, we can easily include this kind of not-algorithm in an analog game. For starters, we could attach the goal “prepare the recipe in less than x minutes”. We would then have a game with a non-algorithm instruction. Here is another example from the existing game Apples to Apples (1999):

“The judge … selects the one [red apple card] he or she thinks is best described by the word on the green apple card.”

This instruction requires understanding from outside the formal system in order to cope with the qualifier “best described” (not to mention a sense of humor).

This raises interesting questions that future analog platform studies should consider: If analog game processes need not be algorithmic, then are algorithmic procedures necessary for analog gameplay at all? And assuming that algorithmic and non-algorithmic procedures coexist in many cases, are there consistent ways in which they interact? It will be useful (if not necessary) to better understand how our computational and non-computational abilities intersect if we want to understand how design decisions are affected.

In closing, people can perform algorithms. The features of human computing capabilities affect analog game design. Human computing is novel in terms of how it affects analog gameplay experience; it is experienced from the first person and it is contingent on our ability to see things as having formal significance. But while our algorithmic capabilities admit us to the platform category, our relevant capabilities are not constrained to algorithms. And while people’s capabilities are certain to vary greatly (by age, experience, knowledge and so forth), the facets mentioned here (experiential side effects, computational appropriation, and the potential for performing non-algorithmic instructions) are consistent across everyone.

–

Featured image is used with permission by the author.

–

Ian Bellomy is an assistant professor of Communication Design within the college of Design, Architecture, Art and Planning at the University of Cincinnati. He mostly teaches interaction design but also teaches a studio on board game design. An interest in accurate and useful descriptions of interactivity led him to the Savannah College of Art and Design where he received an MFA in Interactive Design and Game Development. He recently released Hinges, a simple puzzle game for iPad.